BVI-Artefact: An Artefact Detection Benchmark Dataset for Streamed Videos

University of Bristol

About

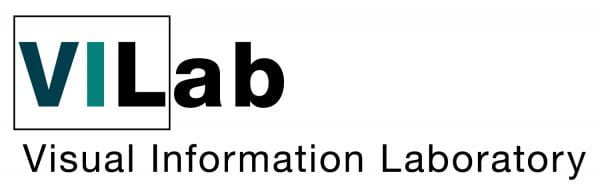

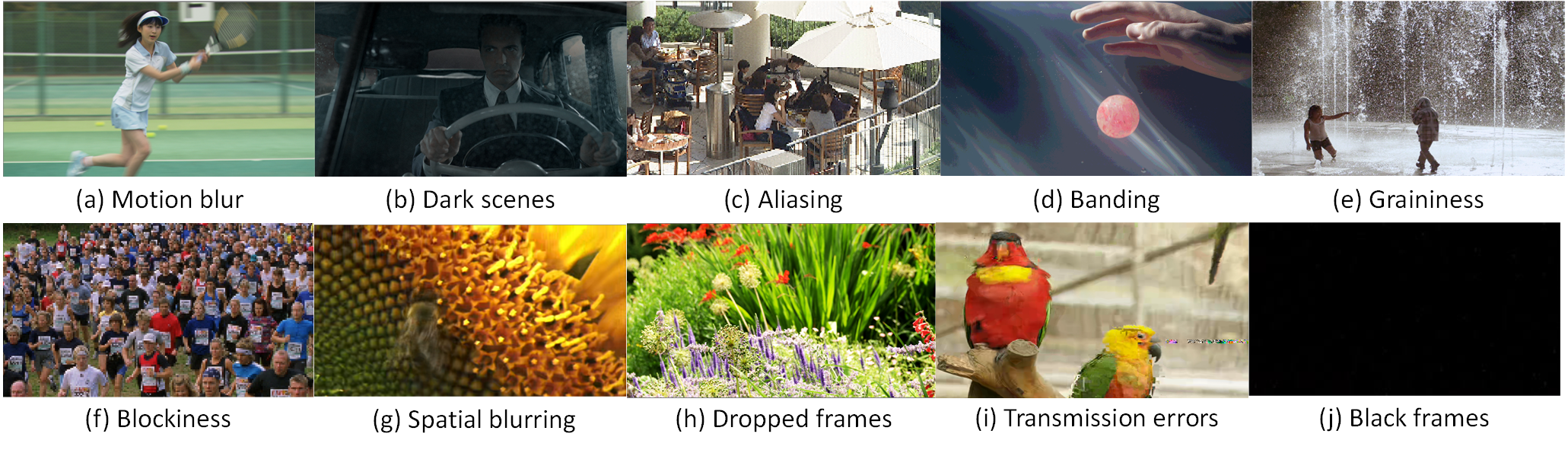

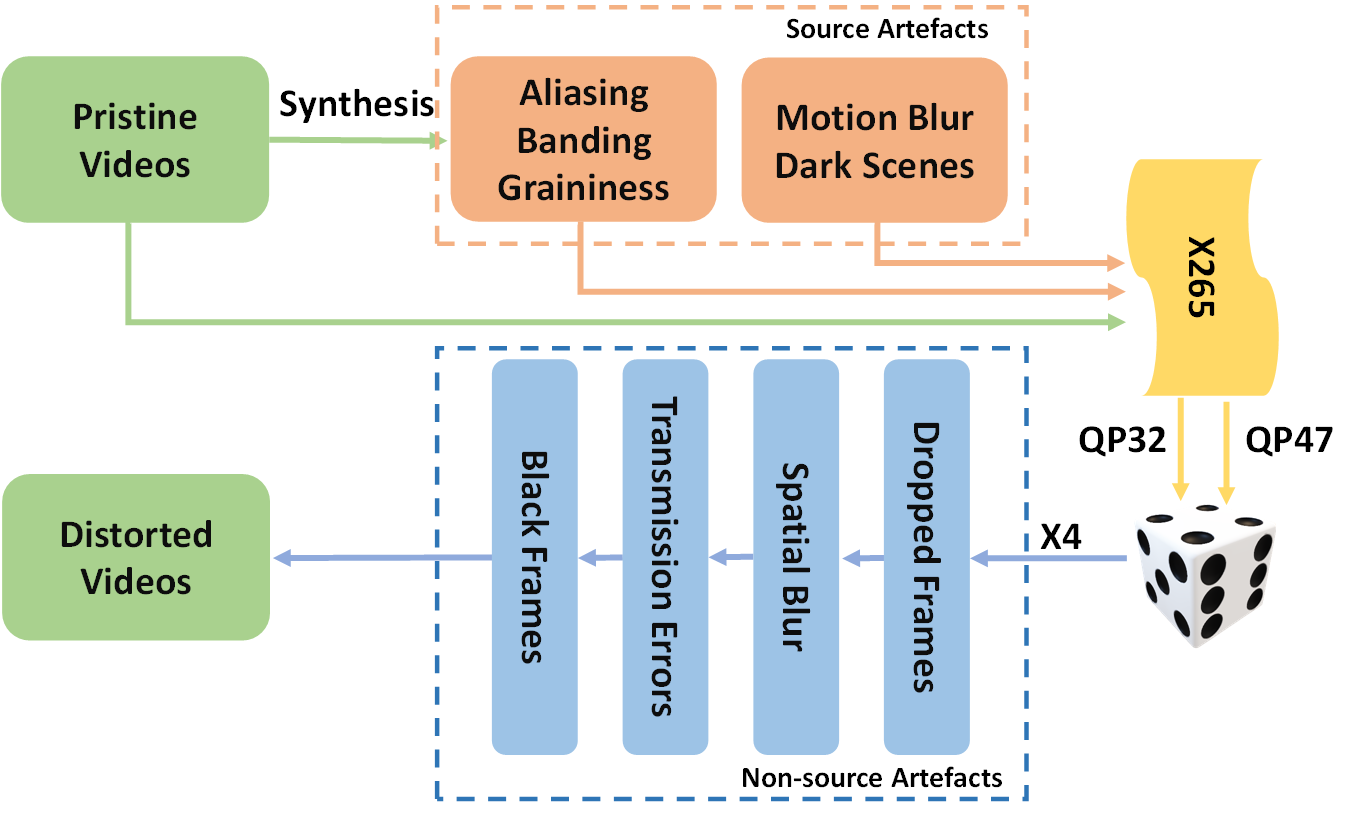

Professionally generated content (PGC) streamed online can contain visual artefacts that degrade the quality of user experience. These artefacts arise from different stages of the streaming pipeline, including acquisition, post-production, compression, and transmission. To better guide streaming experience enhancement, it is important to detect specific artefacts at the user end in the absence of a pristine reference. In this work, we address the lack of a comprehensive benchmark for artefact detection within streamed PGC, via the creation and validation of a large database, BVI-Artefact. Considering the ten most relevant artefact types encountered in video streaming, we collected and generated 480 video sequences, each containing various artefacts with associated binary artefact labels. Based on this new database, existing artefact detection methods are benchmarked. The results show the challenging nature of this task and indicate the need for more reliable artefact detection methods.
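As a rough illustration of how per-sequence binary artefact labels could be used to benchmark a detector, the sketch below computes accuracy and F1 for each artefact type. It is a minimal sketch under stated assumptions, not the evaluation code used in the paper: the artefact names (`artefact_0` to `artefact_9`), the dictionary layout, and the function `per_artefact_scores` are all hypothetical placeholders, and the actual label format of the database may differ.

```python
from typing import Dict, List

# Hypothetical placeholder names for the ten artefact types.
# These are NOT the dataset's real label names.
ARTEFACTS: List[str] = [f"artefact_{i}" for i in range(10)]

# Assumed layout: video id -> {artefact name -> 0 or 1}
Labels = Dict[str, Dict[str, int]]


def per_artefact_scores(truth: Labels, pred: Labels) -> Dict[str, Dict[str, float]]:
    """Compute accuracy and F1 for each artefact type from binary labels."""
    scores: Dict[str, Dict[str, float]] = {}
    for name in ARTEFACTS:
        tp = fp = fn = tn = 0
        for video_id, labels in truth.items():
            t = labels[name]
            p = pred[video_id][name]
            if t == 1 and p == 1:
                tp += 1
            elif t == 0 and p == 1:
                fp += 1
            elif t == 1 and p == 0:
                fn += 1
            else:
                tn += 1
        total = tp + fp + fn + tn
        accuracy = (tp + tn) / total if total else 0.0
        # F1 is defined as 0.0 here when there are no positives at all.
        denom = 2 * tp + fp + fn
        f1 = (2 * tp / denom) if denom else 0.0
        scores[name] = {"accuracy": accuracy, "f1": f1}
    return scores


if __name__ == "__main__":
    # Toy labels for two hypothetical sequences, for demonstration only.
    clean = {name: 0 for name in ARTEFACTS}
    truth = {"seq_a": {**clean, "artefact_0": 1}, "seq_b": {**clean, "artefact_0": 1}}
    pred = {"seq_a": {**clean, "artefact_0": 1}, "seq_b": dict(clean)}
    for name, s in per_artefact_scores(truth, pred).items():
        print(name, s)
```

Reporting scores per artefact type rather than one pooled number keeps rarely occurring artefacts from being hidden by the more common ones.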

Source code and Dataset

Please fill in this registration form to get access to the BVI-Artefact database. I'll then share the download link ASAP.

Acknowledgments

Research reported in this paper was supported by an Amazon Research Award, Fall 2022 CFP. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of Amazon. We also appreciate the funding from the China Scholarship Council, University of Bristol, and the UKRI MyWorld Strength in Places Programme (SIPF00006/1).

Citation

@misc{feng2023bviartefact,
  title={BVI-Artefact: An Artefact Detection Benchmark Dataset for Streamed Videos},
  author={Chen Feng and Duolikun Danier and Fan Zhang and David Bull},
  year={2023},
  eprint={2312.08859},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

[paper]