RankDVQA: Deep VQA based on Ranking-inspired Hybrid Training

University of Bristol

About

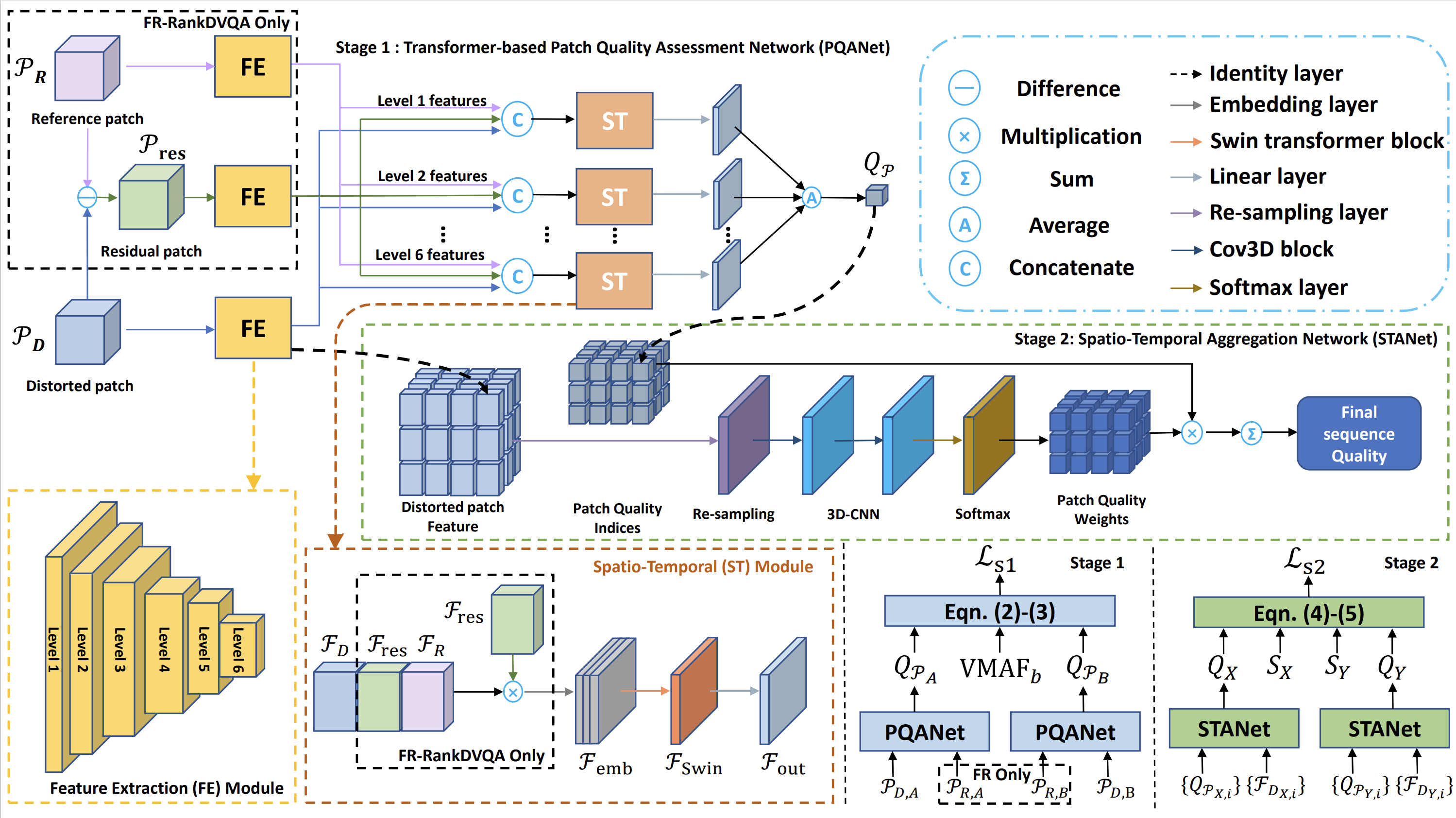

In recent years, deep learning techniques have shown significant potential for improving video quality assessment (VQA), achieving higher correlation with subjective opinions than conventional approaches. However, the development of deep VQA methods has been constrained by the limited availability of large-scale training databases and by ineffective training methodologies. As a result, it is difficult for deep VQA approaches to achieve consistently superior performance and model generalization. In this context, this paper proposes new VQA methods based on a two-stage training methodology, which motivated the development of a large-scale VQA training database that does not rely on human subjects to provide ground-truth labels. This methodology was used to train a new transformer-based network architecture, exploiting the quality ranking of different distorted sequences rather than minimizing the distance from ground-truth quality labels. The resulting deep VQA methods, FR-RankDVQA and NR-RankDVQA (for full-reference and no-reference scenarios, respectively), exhibit consistently higher correlation with perceptual quality than state-of-the-art conventional and deep VQA methods, with average SROCC values of 0.8972 (FR) and 0.7791 (NR) over eight test sets without performing cross-validation.
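The difference between learning from quality rankings and regressing to quality labels can be illustrated with a small sketch. The function below is a generic RankNet-style pairwise loss and is not the loss used in RankDVQA; the name `pairwise_ranking_loss`, its arguments, and the logistic form are assumptions made purely for illustration.

```python
import math


def _softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_ranking_loss(score_a, score_b, a_is_better):
    """Hypothetical RankNet-style loss for one pair of distorted sequences.

    score_a, score_b: predicted quality scores (higher means better quality).
    a_is_better: True if sequence A is ranked above sequence B.

    The loss depends only on the score difference, so the model is never
    asked to match an absolute ground-truth quality value.
    """
    diff = score_a - score_b
    # Binary cross-entropy on sigmoid(diff), written via softplus for stability.
    return _softplus(-diff) if a_is_better else _softplus(diff)


if __name__ == "__main__":
    # Correctly ordered pair: small loss.
    print(pairwise_ranking_loss(2.0, 0.0, True))
    # Wrongly ordered pair: larger loss.
    print(pairwise_ranking_loss(0.0, 2.0, True))
```

Under these assumptions, a pair that is ordered correctly with a wide margin contributes almost nothing to the loss, while a pair ordered incorrectly is penalized roughly in proportion to the size of the error.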

Source code

Source code and the proposed training database have been released on GitHub.

Model

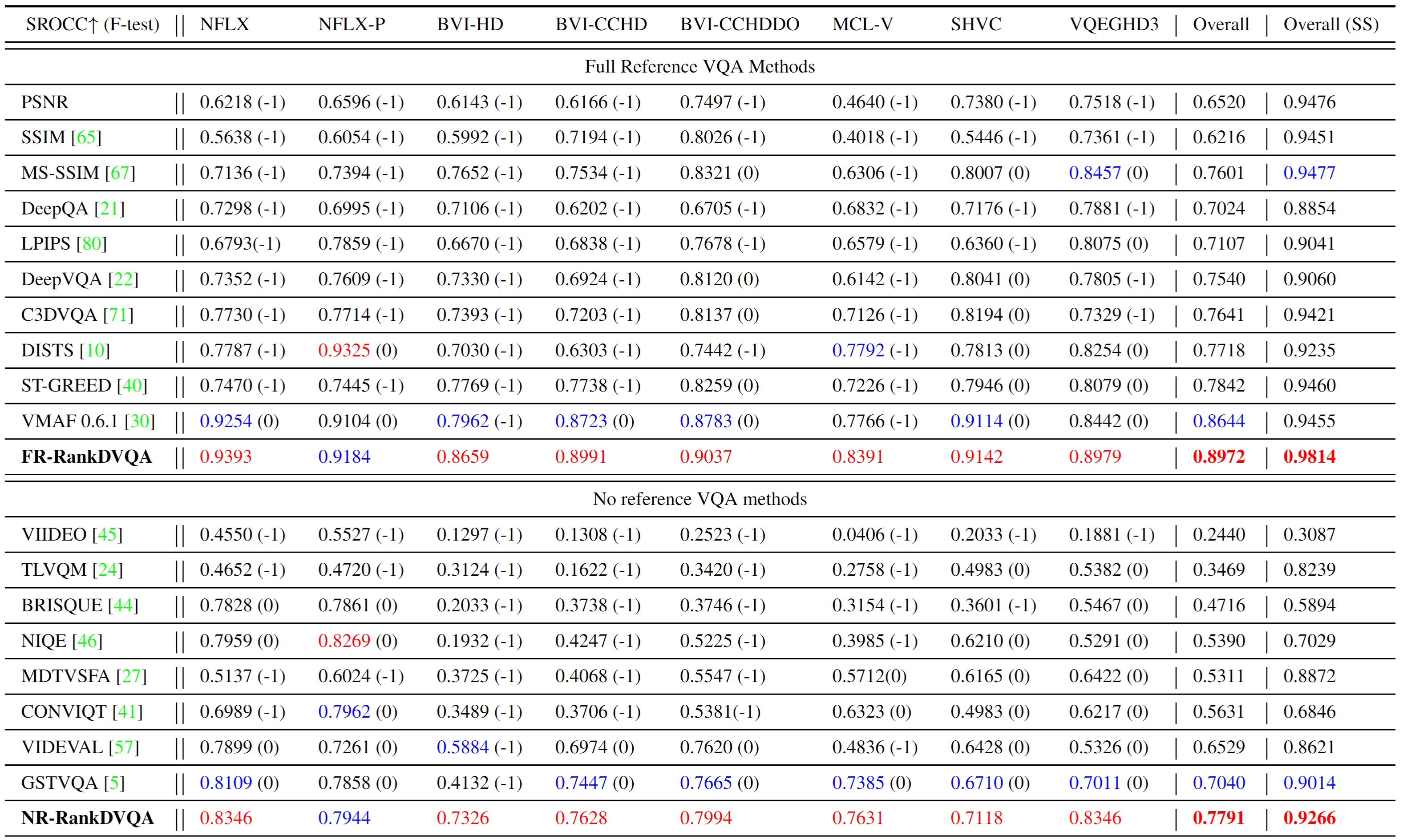

Results

Performance of the proposed methods, other benchmark approaches and ablation study variants on eight HD test databases.

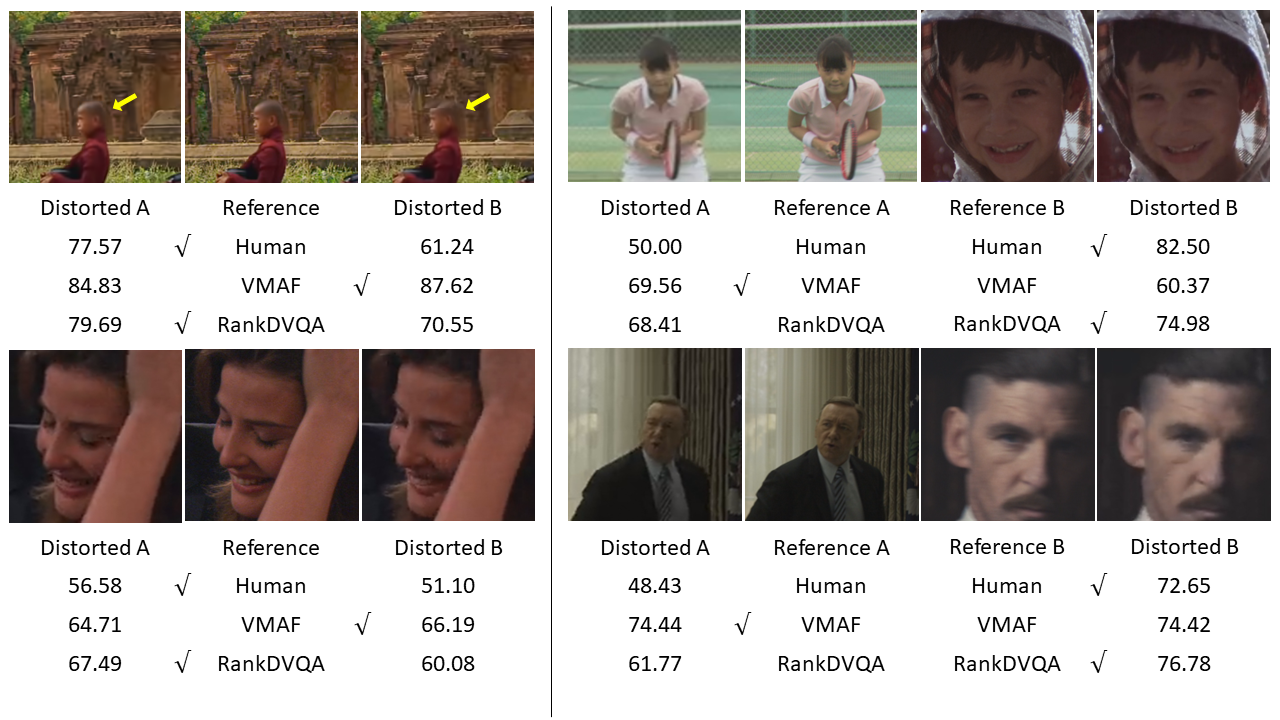

Visual examples demonstrating the superiority of the proposed FR quality metric.
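The correlation figures quoted for the test databases are Spearman rank-order correlation coefficients (SROCC) between predicted and subjective quality scores. As a minimal sketch of how such a value can be computed, the code below ranks both score lists (averaging tied ranks) and takes the Pearson correlation of the ranks. The function names and the sample scores are hypothetical and are not taken from the released code or results.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, assigning tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def srocc(predicted, subjective):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    if len(predicted) != len(subjective) or len(predicted) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    rx, ry = average_ranks(predicted), average_ranks(subjective)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("SROCC is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Made-up metric outputs and subjective scores for four sequences.
    metric_scores = [0.10, 0.40, 0.35, 0.80]
    opinion_scores = [1.0, 3.0, 2.0, 4.0]
    print(srocc(metric_scores, opinion_scores))
```

Because only the ranks matter, SROCC rewards a metric for ordering sequences in the same way as human viewers, regardless of the scale of its raw scores.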

Citation

@InProceedings{Feng_2024_WACV,
    author    = {Feng, Chen and Danier, Duolikun and Zhang, Fan and Bull, David},
    title     = {RankDVQA: Deep VQA Based on Ranking-Inspired Hybrid Training},
    booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
    month     = {January},
    year      = {2024},
    pages     = {1648-1658}
}

[paper]